Accessibility Audit Case Study: Zingerman's Deli Website

Digital Inclusivity & WCAG Compliance Assessment

Role

UX Researcher, Accessibility Analyst

Duration

4 weeks

Team

Angelina Akdis, Zejun Li, Mia Goldstein, Sarah Chaloult, and Grace Bogle

Tools

WAVE (Chrome Extension), aXe (Chrome Extension), Manual Testing

Automated Testing, Manual Testing, Keyboard Navigation, Screen Reader Simulation, Visual Inspection, WCAG 2.1 AA Compliance Assessment

Project Overview

As part of a pro-bono team project, I conducted a comprehensive accessibility audit of the Zingerman's Deli website, focusing on digital inclusivity for all users. According to Pew Research Center, 13% of the American population experiences some type of disability, so this audit aimed to identify barriers that could prevent users from accessing Zingerman's food ordering system.

Context and Rationale

Zingerman's Deli has earned recognition as "one of the largest contributors to the Ann Arbor area's epicurean culture" and was named by Food & Wine magazine as "one of the top 25 food markets in the world." With such a reputation for high-quality food, their online ordering system should provide seamless and accessible experiences for all users, regardless of ability.

Website Audited: https://www.zingermansdeli.com/

My Primary Focus: Menu page analysis and cross-team collaboration

Methodology

Tools and Testing Approach

- Automated Testing: WAVE (Chrome Extension) and aXe (Chrome Extension)

- Manual Testing: Keyboard navigation, screen reader simulation, and visual inspection

- Pages Tested: Homepage, Menus, Order Online, and Catering pages

- Standards: WCAG 2.1 AA compliance guidelines

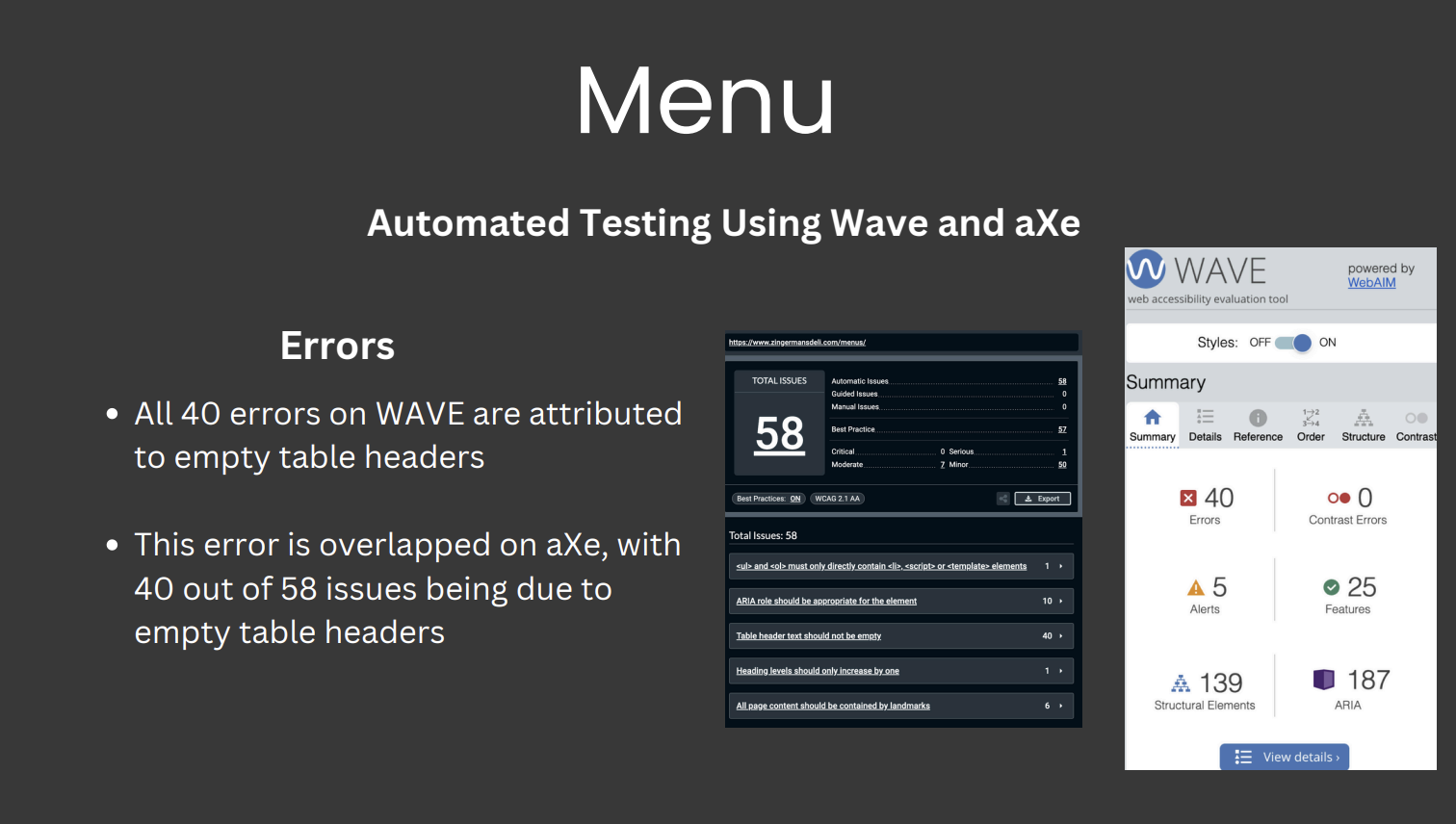

Menu Page Analysis

I led the comprehensive testing of the menu page, which revealed significant structural accessibility issues:

- 58 Total aXe Errors: Identified through automated scanning

- 40 WAVE Errors: Documented accessibility violations

- Primary Issue: Empty table headers throughout the menu structure
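As a rough illustration of the primary issue, the sketch below scans markup for `<th>` elements that contain no readable text. It is not how WAVE or aXe work internally. The class name `EmptyHeaderFinder`, the helper `find_empty_headers`, and the sample markup are all hypothetical, and the check is simplified: it treats an `aria-label` or image alt text as header content and ignores other ways of naming a cell.

```python
from html.parser import HTMLParser


class EmptyHeaderFinder(HTMLParser):
    """Records the (line, column) of every <th> that has no readable text."""

    def __init__(self):
        super().__init__()
        self.empty_headers = []  # positions of <th> elements with no text
        self._open_pos = None    # position of the <th> being read, if any
        self._text = []          # text collected inside that <th>

    def _finish_header(self):
        # Close out the current <th> and record it if nothing readable was found.
        if self._open_pos is not None:
            if not "".join(self._text).strip():
                self.empty_headers.append(self._open_pos)
            self._open_pos = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag in ("th", "td", "tr"):
            # A new cell or row implicitly ends any <th> left unclosed.
            self._finish_header()
        if tag == "th":
            self._open_pos = self.getpos()
            # Simplification: an aria-label counts as header text.
            self._text = [attr_map.get("aria-label") or ""]
        elif tag == "img" and self._open_pos is not None:
            # Alt text on an image inside the header also counts.
            self._text.append(attr_map.get("alt") or "")

    def handle_data(self, data):
        if self._open_pos is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in ("th", "tr", "table"):
            self._finish_header()


def find_empty_headers(html: str):
    """Return the positions of all empty <th> elements in the given markup."""
    finder = EmptyHeaderFinder()
    finder.feed(html)
    finder.close()
    finder._finish_header()
    return finder.empty_headers


# Hypothetical markup, not copied from the audited site.
sample_menu = """<table>
<tr><th></th><th>Price</th></tr>
<tr><th>&nbsp;</th><td>$12</td></tr>
</table>"""

for line, column in find_empty_headers(sample_menu):
    print(f"Empty <th> at line {line}, column {column}")
```

A header that holds only whitespace or a non-breaking space is reported as empty, since a screen reader would have nothing meaningful to announce for it.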

Manual Testing Focus Areas

- Keyboard navigation functionality

- Image alt-text evaluation

- Link actionability assessment

- Color contrast verification

- Page scalability testing (200% zoom)

Key Findings and Issues Identified

Critical Site-Wide Problems

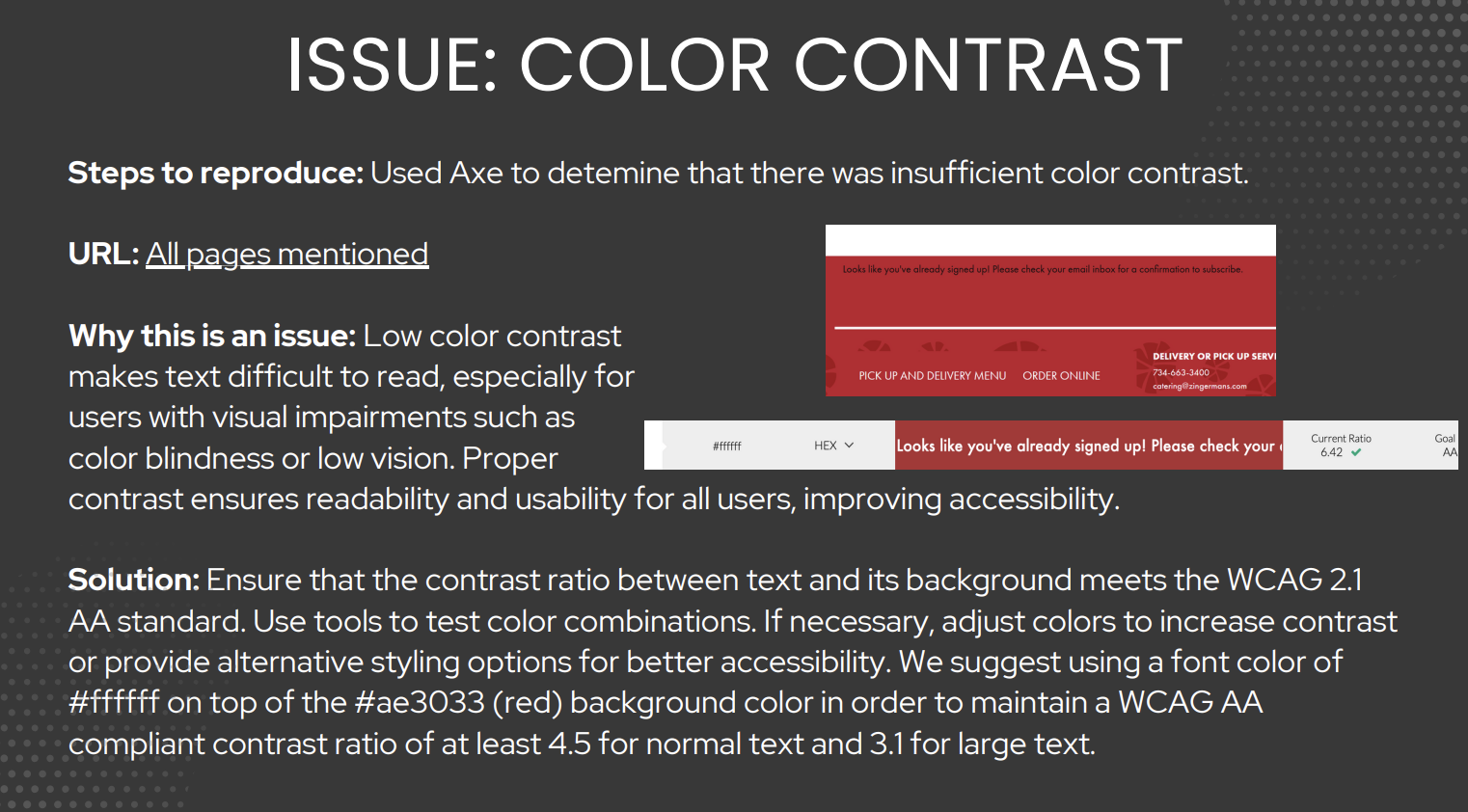

1. Color Contrast Violations

Issue: Insufficient contrast ratios across multiple pages

Impact: Creates barriers for users with visual impairments, color blindness, or low vision

Solution Proposed: Use white (#ffffff) text on the red (#ae3033) backgrounds to meet WCAG AA contrast requirements (at least 4.5:1 for normal text and 3:1 for large text)
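The proposed color pair can be checked by hand with the WCAG 2.1 relative luminance and contrast ratio formulas. The sketch below is a minimal version of that calculation. The function names are hypothetical, and it assumes six-digit hex colors with no alpha channel.

```python
def relative_luminance(hex_color: str) -> float:
    """Relative luminance of an sRGB color such as '#ae3033', per WCAG 2.1."""
    hex_color = hex_color.lstrip("#")
    # Split into red, green, blue and scale each channel to the 0..1 range.
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Undo the sRGB gamma curve (0.03928 is the threshold given in WCAG 2.1).
    r, g, b = [
        c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
        for c in channels
    ]
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa(ratio: float, large_text: bool = False) -> bool:
    """WCAG AA thresholds: 4.5:1 for normal text, 3:1 for large text."""
    return ratio >= (3.0 if large_text else 4.5)


ratio = contrast_ratio("#ffffff", "#ae3033")
print(f"{ratio:.2f}:1, passes AA for normal text: {meets_aa(ratio)}")
```

For white on #ae3033 the ratio comes out at roughly 6.4:1, which clears the 4.5:1 threshold for normal text with some margin.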

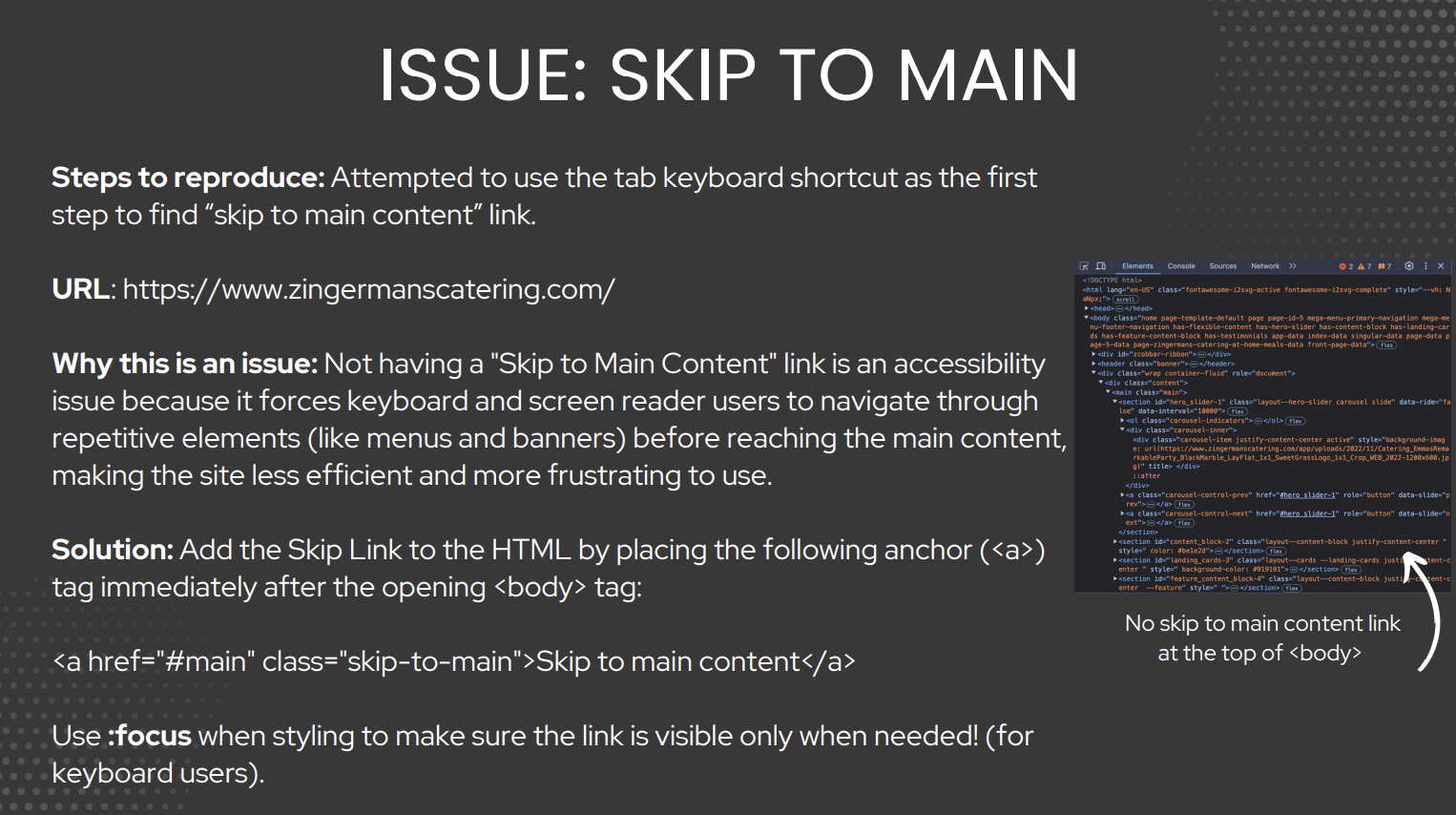

2. Missing Skip-to-Main Links

Issue: No skip navigation available on catering pages

Impact: Forces keyboard and screen reader users to navigate through repetitive elements

Solution Proposed: Add <a href="#main" class="skip-to-main">Skip to main content</a> immediately after the opening <body> tag, and give the main content container a matching id="main" so the link has a target
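A quick way to confirm the fix on each page is to check that the first link in the document is an in-page anchor and that its target exists. The sketch below does this with Python's standard HTML parser. The names `SkipLinkChecker` and `has_working_skip_link` and the sample pages are hypothetical, and the check only looks at markup; it does not confirm that the link becomes visible on keyboard focus.

```python
from html.parser import HTMLParser


class SkipLinkChecker(HTMLParser):
    """Remembers the first link's href and every id used on the page."""

    def __init__(self):
        super().__init__()
        self.first_link_href = None  # href of the first <a>, if it has one
        self.ids = set()             # all non-empty id values found
        self._seen_link = False      # True once the first <a> has been read

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if attr_map.get("id"):
            self.ids.add(attr_map["id"])
        if tag == "a" and not self._seen_link:
            self._seen_link = True
            self.first_link_href = attr_map.get("href")


def has_working_skip_link(html: str) -> bool:
    """True if the first link is an in-page anchor whose target id exists."""
    checker = SkipLinkChecker()
    checker.feed(html)
    checker.close()
    href = checker.first_link_href or ""
    return href.startswith("#") and href[1:] in checker.ids


# Hypothetical pages, not copied from the audited site.
page_with_skip = (
    '<body><a href="#main" class="skip-to-main">Skip to main content</a>'
    '<nav><a href="/menus">Menus</a></nav><main id="main">Sandwiches</main></body>'
)
page_without_skip = (
    '<body><nav><a href="/menus">Menus</a></nav><main id="main">Sandwiches</main></body>'
)

print(has_working_skip_link(page_with_skip))
print(has_working_skip_link(page_without_skip))
```

Checking for the target id matters because a skip link that points at a missing anchor still appears in the markup but does nothing when activated.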

Testing Process and Insights

Evolution of Understanding

This project fundamentally shifted my perspective on accessibility testing methodologies. Initially, I viewed automated tools as comprehensive solutions, but the experience revealed both their power and limitations.

Automated Testing Strengths

Automated tools efficiently identify technical violations (color contrast, empty headers, missing alt-text), provide quantifiable data for tracking improvements, and apply WCAG criteria consistently across pages.

Automated Testing Limitations

Automated tools cannot assess content quality or contextual appropriateness, miss usability issues that affect real user experiences, and offer only limited evaluation of cognitive accessibility concerns.

Manual Testing Revelations

The manual testing phase proved crucial for understanding actual user impact. While our team successfully completed tasks using only keyboard navigation, the experience highlighted friction points that automated tools missed, such as unclear link purposes and inefficient navigation patterns.

Broader Accessibility Considerations

Unanswered Questions and Future Research

This project raised important questions about accessibility testing scope:

- Cognitive Accessibility: Current testing methods focus heavily on visual and motor impairments, but how do we effectively test for cognitive overload or ADHD-related barriers? Pages with overwhelming content or poor information architecture can create significant barriers for users who process information differently.

- Digital Literacy: Accessibility extends beyond disability considerations to include users with varying levels of digital literacy across age groups. This connects to broader UX principles, particularly Nielsen's 10 usability heuristics.

- Testing Methodology Gaps: Our analysis may have missed barriers affecting users with different cognitive processing needs, highlighting the importance of diverse user testing approaches.

Impact and Recommendations

Immediate Actionable Solutions

- Color Contrast Remediation: Implement color palette meeting WCAG AA standards

- Navigation Enhancement: Add skip-to-main links across all pages

- Table Structure Cleanup: Provide meaningful headers for all data tables

- Alternative Text Improvement: Develop content guidelines for descriptive, contextual alt-text

Long-term Accessibility Strategy

- User Testing Integration: Include users with disabilities in regular testing cycles

- Automated Monitoring: Implement continuous accessibility scanning in the development workflow

- Cognitive Load Assessment: Develop methods for evaluating information architecture from a cognitive accessibility perspective

Personal Learning Outcomes

This project reinforced that accessibility is not a checklist item but an ongoing commitment to inclusive design. The combination of automated and manual testing proved essential for comprehensive evaluation, and the experience highlighted how seemingly minor technical issues can create significant barriers for users.

The collaboration aspect was equally valuable, as different team members brought unique perspectives to problem identification and solution development. Working this way more closely resembles how accessibility problems are found and solved in real-world teams.

Technical Skills Developed

- Proficiency with WAVE and aXe accessibility testing tools

- Understanding of WCAG 2.1 AA compliance standards

- Manual accessibility testing methodologies

- Cross-browser compatibility assessment

- Documentation and presentation of technical findings to stakeholders

Key Reflections

Accessibility Testing Requires Multiple Approaches

Automated tools provide essential technical validation, but manual testing reveals the human experience. The combination of both approaches creates a comprehensive understanding of actual user barriers and friction points.

Team Collaboration Enhances Problem Discovery

Different team members identified unique accessibility issues based on their perspectives and testing approaches. This reinforced the importance of diverse viewpoints in creating inclusive digital experiences.

Technical Issues Have Real Human Impact

Seemingly minor code problems like empty table headers create significant navigation barriers for screen reader users. This project demonstrated how technical precision directly affects user experience and digital equity.

Accessibility is an Ongoing Commitment

The audit revealed that accessibility cannot be treated as a one-time checklist but requires continuous attention, user feedback, and iterative improvement throughout the design and development process.
